I've been working up some demos for Project Cooper to show it working well with the Java class libraries and with the Android SDK. Most recently I've been trying my hand at getting some OpenGL demos going. I've briefly bumped into OpenGL over the years, but have by no means become a dab hand at navigating the mass of calls in the library, and so have been finding the OpenGL documentation invaluable. To help me get something working, I've been following steps in books and looking at demos found online.

One demo in a book I have involves a spinning cube (a popular type of demo) that uses an image to texture each side of the cube and also makes the cube transparent. It's certainly a nice-looking demo. I cobbled the code into a Project Cooper application, tested it out and was left very puzzled. You see, the demo ran just fine on the Android emulator, but on my shiny HTC Desire the cube had no bitmap texture on it: the cube was bare!

So the challenge was to find out why a well-performing physical Android device was beaten by the meagre emulator. The solution was found using logging, documentation, the Android source and some general web searching.

The first thing to do was to make sure the OpenGL code was emitting as much debug information as possible, so I turned on the debug flags:

```
view.DebugFlags := GLSurfaceView.DEBUG_CHECK_GL_ERROR or GLSurfaceView.DEBUG_LOG_GL_CALLS;
```

Now, I should point out here that I realise this doesn't look right as far as Android programming goes. In Java you'd write it more like:

```java
view.setDebugFlags(GLSurfaceView.DEBUG_CHECK_GL_ERROR | GLSurfaceView.DEBUG_LOG_GL_CALLS);
```

There are a couple of things to say on this front. Firstly, Project Cooper uses a Pascal/Delphi-like language, so bitwise OR operations are done with or. More importantly, though, Delphi and C# programmers are used to properties, a notion that is completely absent in Java. The Android SDK has many methods of the form setFoo() and getFoo(), which essentially act as the setter and getter for a Foo property. Project Cooper spots these, fabricates a Foo property and gives you the option of using either the original methods or the property. For those of us to whom properties are a natural thing, Project Cooper keeps us happy by adding them to code that doesn't explicitly define them.
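To make the getFoo()/setFoo() convention concrete, here's a rough Java sketch that uses reflection to list the property names implied by matching getter/setter pairs. It only illustrates the naming convention; it is not how Project Cooper works internally, and FakeSurfaceView is a hypothetical stand-in for an SDK class such as GLSurfaceView.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

class ImpliedProperties {
    // Hypothetical stand-in for an SDK class such as GLSurfaceView.
    static class FakeSurfaceView {
        private int debugFlags;
        public int getDebugFlags() { return debugFlags; }
        public void setDebugFlags(int flags) { debugFlags = flags; }
        public void requestRender() { }
    }

    // Returns, sorted, every Foo for which a public no-argument getFoo() and a
    // public setFoo(T) exist, where T is getFoo()'s return type.
    static List<String> find(Class<?> cls) {
        TreeSet<String> names = new TreeSet<>();
        for (Method getter : cls.getMethods()) {
            String name = getter.getName();
            if (!name.startsWith("get") || name.length() == 3
                    || getter.getParameterCount() != 0) {
                continue;
            }
            String property = name.substring(3);
            try {
                cls.getMethod("set" + property, getter.getReturnType());
                names.add(property);
            } catch (NoSuchMethodException e) {
                // No matching setter (e.g. getClass()); this sketch only
                // reports read/write pairs.
            }
        }
        return new ArrayList<>(names);
    }

    public static void main(String[] args) {
        System.out.println(find(FakeSurfaceView.class)); // [DebugFlags]
    }
}
```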

Back to the story...

After checking the log output in DDMS and seeing where the error came up amongst all the various OpenGL calls, I narrowed the issue down to the point where the bitmap was being loaded and applied as a texture:

```
var bmp := BitmapFactory.decodeResource(ctx.Resources, resource);
GLUtils.texImage2D(GL10.GL_TEXTURE_2D, 0, bmp, 0);
Log.e(Tag, 'OpenGL error: ' + gl.glGetError);
gl.glTexParameterf(GL10.GL_TEXTURE_2D, GL10.GL_TEXTURE_MIN_FILTER, GL10.GL_LINEAR);
gl.glTexParameterf(GL10.GL_TEXTURE_2D, GL10.GL_TEXTURE_MAG_FILTER, GL10.GL_LINEAR);
bmp.recycle();
```

The Log call was reporting an error value of 1281, which according to the documentation is GL_INVALID_VALUE. This seemed odd, as the code was pretty much lifted straight from another example, along with the image file, so I figured I'd better get more information about the bitmap from the code. GLUtils.texImage2D() takes the bitmap, works out its internal format and pixel type, extracts the pixel data into a buffer and passes the results on to OpenGL's glTexImage2D() (which GL10.glTexImage2D() wraps for Java code). With that in mind I used these log calls (again taking advantage of the implied properties Project Cooper adds):

```
Log.I('GLCube', 'Bmp = ' + bmp.Width + ' x ' + bmp.Height);
Log.I('GLCube', 'Type/Internal format = ' + GLUtils.Type[bmp] + ' / ' + GLUtils.InternalFormat[bmp]);
```

On the emulator, this produced the following DDMS output:

```
INFO/GLCube(953): Bmp = 128 x 128
INFO/GLCube(953): Type/Internal format = 33635 / 6407
```

Again, checking the values of these constants in the documentation told me the type was GL10.GL_UNSIGNED_SHORT_5_6_5 and the internal format was GL10.GL_RGB. An interesting discrepancy showed up on the real device, though, as it gave this output:

```
INFO/GLCube(3278): Bmp = 192 x 192
INFO/GLCube(3278): Type/Internal format = 33635 / 6407
```

Hmm: the same image file, a 128x128 PNG, gets inflated by 50% in each dimension on the real device. Time for some research.
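Looking up raw numbers such as 1281, 33635 and 6407 by hand gets tedious, so here's a plain-Java sketch of a small lookup for the values seen in these logs. The class and method names are my own invention; the numeric values are the standard OpenGL ES 1.x enums, which Android's GL10 fields mirror. On Android itself, android.opengl.GLU.gluErrorString() should also turn an error code into readable text.

```java
class GlConstantNames {
    // Values returned by glGetError().
    static String errorName(int value) {
        switch (value) {
            case 0x0000: return "GL_NO_ERROR";
            case 0x0500: return "GL_INVALID_ENUM";
            case 0x0501: return "GL_INVALID_VALUE";
            case 0x0502: return "GL_INVALID_OPERATION";
            case 0x0503: return "GL_STACK_OVERFLOW";
            case 0x0504: return "GL_STACK_UNDERFLOW";
            case 0x0505: return "GL_OUT_OF_MEMORY";
            default: return unknown(value);
        }
    }

    // Values such as those returned by GLUtils.getInternalFormat(bitmap).
    static String formatName(int value) {
        switch (value) {
            case 0x1906: return "GL_ALPHA";
            case 0x1907: return "GL_RGB";
            case 0x1908: return "GL_RGBA";
            case 0x1909: return "GL_LUMINANCE";
            case 0x190A: return "GL_LUMINANCE_ALPHA";
            default: return unknown(value);
        }
    }

    // Values such as those returned by GLUtils.getType(bitmap).
    static String typeName(int value) {
        switch (value) {
            case 0x1401: return "GL_UNSIGNED_BYTE";
            case 0x8033: return "GL_UNSIGNED_SHORT_4_4_4_4";
            case 0x8034: return "GL_UNSIGNED_SHORT_5_5_5_1";
            case 0x8363: return "GL_UNSIGNED_SHORT_5_6_5";
            default: return unknown(value);
        }
    }

    private static String unknown(int value) {
        return "unknown (0x" + Integer.toHexString(value) + ")";
    }

    public static void main(String[] args) {
        // The raw numbers from the log output above.
        System.out.println(errorName(1281));  // GL_INVALID_VALUE
        System.out.println(typeName(33635));  // GL_UNSIGNED_SHORT_5_6_5
        System.out.println(formatName(6407)); // GL_RGB
    }
}
```

Separate methods are used because the same number can mean different things depending on which call produced it.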

The first thing I did was find some similar OpenGL code in the Android SDK's APIDemos, which include a TriangleRenderer class that does a similar texture load in its onSurfaceCreated() method. However, it seems to avoid the problem entirely by reading the resource raw via an InputStream before involving the BitmapFactory:

```java
InputStream is = mContext.getResources().openRawResource(R.raw.robot);
Bitmap bitmap;
try {
    bitmap = BitmapFactory.decodeStream(is);
} finally {
    try {
        is.close();
    } catch (IOException e) {
        // Ignore.
    }
}

GLUtils.texImage2D(GL10.GL_TEXTURE_2D, 0, bitmap, 0);
bitmap.recycle();
```

Sure enough, when I switched to equivalent code the problem disappeared and I had a nicely rendered image on all sides of my cube. So the device *could* work with the image, but only when it was loaded with decodeStream() rather than decodeResource().

Further searching revealed that some devices cannot use textures whose width and height are not powers of 2. On the emulator, the bitmap from the BitmapFactory is 128x128 and so satisfies the 'power of 2' requirement. On the device, with the bitmap inflated to 192x192, the requirement clearly is not met.
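One cheap safeguard is to check the bitmap's edges before handing it to texImage2D(). Here's a minimal Java sketch; the helper names are my own, and on Android you would pass in bmp.getWidth() and bmp.getHeight().

```java
class PowerOfTwo {
    // A positive n is a power of two exactly when it has a single bit set,
    // which is what n & (n - 1) == 0 tests for.
    static boolean isPowerOfTwo(int n) {
        return n > 0 && (n & (n - 1)) == 0;
    }

    // True if both texture edges satisfy the power-of-two rule.
    static boolean isPotTexture(int width, int height) {
        return isPowerOfTwo(width) && isPowerOfTwo(height);
    }

    public static void main(String[] args) {
        System.out.println(isPotTexture(128, 128)); // true  (emulator)
        System.out.println(isPotTexture(192, 192)); // false (HTC Desire)
    }
}
```

Logging a warning when this returns false would have pointed straight at the 192x192 bitmap.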

Apparently, support for textures whose edges are not powers of two (NPOT textures) can be detected by checking the OpenGL extensions string for the presence of the text GL_ARB_texture_non_power_of_two. So something like:

```
if gl.glGetString(GL10.GL_EXTENSIONS).contains('GL_ARB_texture_non_power_of_two') then
  Log.i(Tag, 'NPOT texture extension found :-)')
else
  Log.i(Tag, 'NPOT texture extension not found :-(');
```
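Since the extensions string is a space-separated list of names, matching whole names is a little more robust than a substring test. Here's a Java sketch of that idea; hasNpotSupport is a hypothetical helper rather than an SDK call, and including GL_OES_texture_npot is my own assumption about another name an OpenGL ES driver might advertise, so check the list against your own devices.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

class NpotCheck {
    // GL_ARB_... is the name checked above; GL_OES_texture_npot is an
    // assumption about an alternative name, not a confirmed requirement.
    static final String[] NPOT_EXTENSIONS = {
        "GL_ARB_texture_non_power_of_two",
        "GL_OES_texture_npot"
    };

    // extensions is the string returned by glGetString(GL_EXTENSIONS).
    static boolean hasNpotSupport(String extensions) {
        if (extensions == null) {
            return false;
        }
        // Split on whitespace so only whole extension names match.
        Set<String> names = new HashSet<>(Arrays.asList(extensions.trim().split("\\s+")));
        for (String name : NPOT_EXTENSIONS) {
            if (names.contains(name)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Made-up extension strings, for illustration only.
        System.out.println(hasNpotSupport("GL_OES_byte_coordinates GL_OES_fixed_point")); // false
        System.out.println(hasNpotSupport("GL_ARB_texture_non_power_of_two GL_EXT_bgra")); // true
    }
}
```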

I must admit to a bit of disappointment on confirming that my HTC Desire does not have this OpenGL extension, only to find that the Android emulator does.

OK, so my telephone does not support NPOT textures, but the image in the Android project is 128x128, so why does it get scaled up to 192x192 when loaded via BitmapFactory.decodeResource()? Well, according to the documentation, a call to BitmapFactory.decodeResource(ctx.Resources, resource) results in a call to BitmapFactory.decodeResourceStream() (as opposed to the BitmapFactory.decodeStream() used earlier), with nil (or null) passed in for the BitmapFactory.Options.

Looking at the source for this method, it all starts to become clear. The method description (just above the listing in the source code, and also in the documentation) says:

Decode a new Bitmap from an InputStream. This InputStream was obtained from resources, which we pass to be able to scale the bitmap accordingly.

Here's a copy of the method. decodeResource() calls it with no options, along with a new TypedValue that was filled in with the resource's details (including its density) when the resource was opened.

```java
public static Bitmap decodeResourceStream(Resources res, TypedValue value,
        InputStream is, Rect pad, Options opts) {

    if (opts == null) {
        opts = new Options();
    }

    if (opts.inDensity == 0 && value != null) {
        final int density = value.density;
        if (density == TypedValue.DENSITY_DEFAULT) {
            opts.inDensity = DisplayMetrics.DENSITY_DEFAULT;
        } else if (density != TypedValue.DENSITY_NONE) {
            opts.inDensity = density;
        }
    }

    if (opts.inTargetDensity == 0 && res != null) {
        opts.inTargetDensity = res.getDisplayMetrics().densityDpi;
    }

    return decodeStream(is, pad, opts);
}
```

So density will be TypedValue.DENSITY_DEFAULT (as it is for an image in a drawable folder without a density qualifier), and therefore inDensity in the new options object is set to DisplayMetrics.DENSITY_DEFAULT (160 dpi). The inTargetDensity field gets set to the device's density, which for my HTC Desire is 240 dpi, a value 50% larger than 160.

Well, in case it isn't patently clear by now, this code sets things up so that the image is scaled to match the density of my device. In general this is probably a good thing: if I were placing the image on the screen in a regular View, I'd want it to look the same on devices with different screen densities, and this auto-scaling helps there. But when loading an image with power-of-two edges to make a texture, where those edges must stay powers of two, this auto-scaling is most definitely not helpful.

To avoid the problem, a quick look at the documentation leads us to the inScaled field of the BitmapFactory.Options class. It tells us that when this field is not set, no scaling takes place during the image load. However, the field is set automatically when an Options object is constructed, so it has to be cleared explicitly.

Changing the original code to start like this makes everything happy:

```
var options := new BitmapFactory.Options;
options.inScaled := False;
var bmp := BitmapFactory.decodeResource(ctx.Resources, resource, options);
```
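To double-check the arithmetic, here's a plain-Java model of the density decision made in decodeResourceStream(), combined with the inScaled switch. It's a simplification rather than BitmapFactory itself: I'm assuming the scale factor is inTargetDensity / inDensity with round-to-nearest, and the constants are local copies of the Android TypedValue and DisplayMetrics values.

```java
class DensityScaling {
    // Local copies of TypedValue.DENSITY_DEFAULT, TypedValue.DENSITY_NONE
    // and DisplayMetrics.DENSITY_DEFAULT.
    static final int TYPED_VALUE_DENSITY_DEFAULT = 0;
    static final int TYPED_VALUE_DENSITY_NONE = 0xffff;
    static final int DISPLAY_DENSITY_DEFAULT = 160;

    // Approximates the edge length of a decoded resource image.
    // resourceDensity plays the role of TypedValue.density, deviceDpi of
    // DisplayMetrics.densityDpi, and inScaled of the Options field.
    static int scaledEdge(int edge, int resourceDensity, int deviceDpi, boolean inScaled) {
        // Same choice of inDensity as decodeResourceStream() makes.
        int inDensity = 0;
        if (resourceDensity == TYPED_VALUE_DENSITY_DEFAULT) {
            inDensity = DISPLAY_DENSITY_DEFAULT;
        } else if (resourceDensity != TYPED_VALUE_DENSITY_NONE) {
            inDensity = resourceDensity;
        }
        int inTargetDensity = deviceDpi;
        if (!inScaled || inDensity == 0 || inTargetDensity == 0
                || inDensity == inTargetDensity) {
            return edge; // no scaling requested or needed
        }
        // Assumption: scale by target/source density, rounding to nearest.
        float scale = inTargetDensity / (float) inDensity;
        return (int) (edge * scale + 0.5f);
    }

    public static void main(String[] args) {
        System.out.println(scaledEdge(128, TYPED_VALUE_DENSITY_DEFAULT, 160, true));  // 128 (160 dpi screen)
        System.out.println(scaledEdge(128, TYPED_VALUE_DENSITY_DEFAULT, 240, true));  // 192 (HTC Desire)
        System.out.println(scaledEdge(128, TYPED_VALUE_DENSITY_DEFAULT, 240, false)); // 128 (inScaled off)
    }
}
```

The middle case reproduces the 128 to 192 inflation seen in the device log, and clearing inScaled brings the edge back to 128.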

To prove the point, here are a couple of screenshots. This one is from the emulator, which seems to render this cube (with translucency disabled to make the screenshot clearer) at around 18 frames per second (FPS).

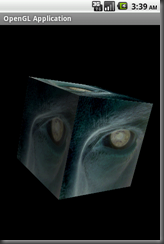

This one comes from the telephone, which renders about three times as quickly, at around 52 FPS. Now, I hardly need to point out that this screenshot doesn't look very good.

It seems that taking a screenshot involves grabbing the screen buffer line by line. However, if the view is updating itself quickly enough, it will have redrawn before the screen capture completes. Indeed, at 52 redraws per second there seems to be little chance of getting a reasonable screen capture.

I'd estimate that the screen updated 25 or so times during that capture, which suggests it takes DDMS around half a second (25 / 52 ≈ 0.48 s) to capture the screen of a connected physical Android device!
